Advanced features and options

Test parametrization

Once you’ve defined a simple test for your component, you might want to verify that its behaviour is correct for multiple values of one or more parameters. This section explains how to parametrize your existing test cases.

In general, there are two ways of implementing parametrization. The first one requires only a few changes to the code from the previous section. The second one (explained in the last subsection) is more complex, but it is also the most useful for circuit model tests.

Iteration with one parameter

This is the simplest form of parametrization: the test will loop over a range of values for one parameter. We’ll continue with the disk resonator test from the previous section. Let’s parametrize the disk’s effective refractive index:

```python
from ip_manager.testing import ComponentReferenceTest, Compare
import numpy as np
import pytest


@pytest.mark.comparisons([Compare.LayoutToGds, Compare.LayoutToXML])
class TestDiskResonator(ComponentReferenceTest):

    @pytest.fixture
    def component(self, disk_n_eff):
        # Create and return a basic disk resonator.
        from team_library.all import DiskResonator
        my_disk = DiskResonator(name='my_disk')
        my_disk.Layout(disk_radius=10.0)
        my_disk.CircuitModel(disk_n_eff=disk_n_eff)
        return my_disk

    # As this refractive index is parametrized,
    # all tests will automatically be run three times (once for each value).
    @pytest.fixture(params=[2.98, 3.0, 3.02])
    def disk_n_eff(self, request):
        return request.param

    # The reference file name has to differentiate between different parameter values.
    @pytest.fixture
    def reference_name(self, disk_n_eff):
        return 'my_disk_' + str(disk_n_eff)
```

As component now depends on disk_n_eff, which is a parametrized fixture, the test will rerun for every value of disk_n_eff. For more information on parametrizing fixtures this way, check out the pytest documentation.

As you can see, we’re also specifying a custom reference file name so that it depends on disk_n_eff. This is necessary for parametrized reference tests to work correctly.

If all five test types are active, 15 tests will run in total using 12 reference files. Two of the three higher-level tests will fail: when the refractive index is not equal to 3.0, the peaks shift away from their expected values.
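The counts in the previous paragraph follow from simple multiplication. The sketch below reproduces them under an assumption that is not stated in the original text: the five test types are taken to be the four reference comparisons used elsewhere on this page plus one higher-level test, with one reference file per comparison. It also mirrors the reference_name fixture to show that each parameter value gets its own file name.

```python
# Assumed split of the "five test types": four reference comparisons,
# each with one reference file, plus one higher-level (non-reference) test.
REFERENCE_COMPARISONS = ["GdsToGds", "LayoutToXML", "NetlistToXML", "SMatrix"]
OTHER_TESTS = ["peak_test"]  # hypothetical stand-in for the higher-level test
N_EFF_VALUES = [2.98, 3.0, 3.02]


def reference_name(disk_n_eff):
    # Same construction as the reference_name fixture in the example above.
    return 'my_disk_' + str(disk_n_eff)


def count_runs(values):
    """Return (number of test runs, number of reference files) for a sweep."""
    tests_per_value = len(REFERENCE_COMPARISONS) + len(OTHER_TESTS)
    return len(values) * tests_per_value, len(values) * len(REFERENCE_COMPARISONS)


names = [reference_name(v) for v in N_EFF_VALUES]
print(names)                     # ['my_disk_2.98', 'my_disk_3.0', 'my_disk_3.02']
print(count_runs(N_EFF_VALUES))  # (15, 12)
```

Because every value yields a distinct base name, the three runs never overwrite or compare against each other's reference files.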

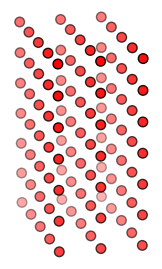

Test all combinations of multiple parameters

Parameter space (red dots indicate active tests)

Adding several parametrized fixtures in the same manner will cause pytest to run all tests for every possible combination of these parameter values. For example, the following code performs a layout reference test for 25 different instances of a component:

```python
from ip_manager.testing import ComponentReferenceTest, Compare
import numpy as np
import pytest


@pytest.mark.comparisons([Compare.LayoutToGds, Compare.LayoutToXML])
class TestDiskResonator(ComponentReferenceTest):

    @pytest.fixture
    def component(self, radius, spacing):
        # Create and return a basic disk resonator.
        from team_library.all import DiskResonator
        my_disk = DiskResonator(name='my_disk')
        my_disk.Layout(disk_radius=radius,
                       disk_wg_width=0.5,
                       coupler_spacing=spacing)
        return my_disk

    @pytest.fixture(params=[9.0, 9.5, 10.0, 10.5, 11.0])
    def radius(self, request):
        return request.param

    @pytest.fixture(params=[0.4, 0.5, 0.6, 0.7, 0.8])
    def spacing(self, request):
        return request.param

    # The reference file name has to differentiate between different parameter values.
    @pytest.fixture
    def reference_name(self, radius, spacing):
        return 'my_disk_' + str(radius) + '_' + str(spacing)
```

Don’t forget to run generate_reffiles.py to generate the reference files before running the tests.

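Note that the number of reference files and the test duration can grow quickly this way: every additional parameter multiplies the number of combinations, so adding a third parameter with five values would already give 125 component instances.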
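pytest forms the Cartesian product of independently parametrized fixtures. The stdlib sketch below imitates that with itertools.product, using the radius and spacing values from the example, to show where the 25 instances come from and that the combined reference name stays unique. The helper n_instances is hypothetical and only illustrates the multiplicative growth.

```python
import math
from itertools import product

radii = [9.0, 9.5, 10.0, 10.5, 11.0]
spacings = [0.4, 0.5, 0.6, 0.7, 0.8]


def reference_name(radius, spacing):
    # Same construction as the reference_name fixture in the example above.
    return 'my_disk_' + str(radius) + '_' + str(spacing)


def n_instances(*parameter_lists):
    """Number of component instances when every combination is tested."""
    return math.prod(len(values) for values in parameter_lists)


combinations = list(product(radii, spacings))  # every (radius, spacing) pair
names = [reference_name(r, s) for r, s in combinations]

print(len(combinations))                             # 25
print(names[0])                                      # my_disk_9.0_0.4
print(n_instances(radii, spacings, [1, 2, 3, 4, 5]))  # 125
```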

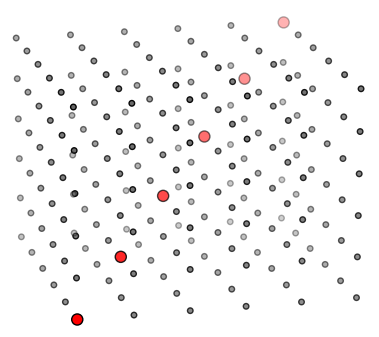

Walk once through multiple sets of parameters

Parameter space (red dots indicate active tests)

If you do not want the multi-dimensional ‘stacking’ effect described in the previous subsection, take this approach instead. It shows how to create sets of values that belong together, so that the parameter sweep stays one-dimensional. This is especially useful when the expected results depend on the input parameters, as is usually the case.

The following code sample tests the circuit model’s peaks for four pairs of input parameters (disk_n_eff and disk_roundtrip_transmission) and then compares each result to the corresponding expected values:

```python
from ip_manager.testing import CircuitModelTest
import numpy as np
import pytest


class TestDiskResonatorPeaks(CircuitModelTest):

    @pytest.fixture
    def component(self, disk_n_eff, disk_rt_trans):
        # Create and return a basic disk resonator.
        from diskres_cell import DiskResonator
        my_disk = DiskResonator(name='my_disk')
        my_disk.CircuitModel(disk_n_eff=disk_n_eff,
                             disk_roundtrip_transmission=disk_rt_trans)
        return my_disk

    # The reference file name has to differentiate between different parameter values.
    @pytest.fixture
    def reference_name(self, disk_n_eff, disk_rt_trans):
        return 'my_disk_' + str(disk_n_eff) + '_' + str(disk_rt_trans)

    # This test will rerun 4 times, once for every set of parameters
    # (which are auto-converted to fixtures during the test).
    @pytest.mark.parametrize(
        'disk_n_eff, disk_rt_trans, exp_peak_wl, exp_peak_fwhm',
        [(3.00, 0.92, 1.500, 0.0035),
         (3.01, 0.88, 1.505, 0.0045),
         (3.02, 0.84, 1.510, 0.0057),
         (3.03, 0.80, 1.515, 0.0067)])
    def test_disk_smatrix(self, smatrix, disk_n_eff, disk_rt_trans, exp_peak_wl, exp_peak_fwhm):
        # Create a tester object
        from ip_manager.testing import SMatrixTester
        tester = SMatrixTester(smatrix)

        # Perform general tests
        assert tester.is_reciprocal(), "Component is not reciprocal"
        assert tester.is_passive(), "Component is active"

        # Select a terminal link
        tester.select_ports('in', 'out')  # from 'in' to 'out'

        # Perform tests on this link
        assert tester.has_peak_at(centre=exp_peak_wl, fwhm=exp_peak_fwhm), \
            "No peak at {} um".format(exp_peak_wl)
        assert tester.powerdB_at(exp_peak_wl) < -6.0, "Peak is not deep enough"
```

The fixtures are no longer declared explicitly; instead, they are inferred from the parameter sets given right above the test method. This is why component can use disk_n_eff and disk_rt_trans without problems. For more information on parametrizing tests this way, check out the pytest documentation.

In the above example, only three of the four tests will pass. The last one fails because the suppression at 1.515 micrometres is not strong enough (5 dB rather than > 6 dB).
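To make the difference with the previous subsection concrete, the sketch below splits the comma-separated argument names the same way @pytest.mark.parametrize does and pairs them with each tuple, yielding four parameter sets. It then shows that crossing the two input columns instead would give 16 runs. The helper as_param_sets is hypothetical and only models the pairing; it is not pytest's implementation.

```python
from itertools import product

argnames = 'disk_n_eff, disk_rt_trans, exp_peak_wl, exp_peak_fwhm'
argvalues = [(3.00, 0.92, 1.500, 0.0035),
             (3.01, 0.88, 1.505, 0.0045),
             (3.02, 0.84, 1.510, 0.0057),
             (3.03, 0.80, 1.515, 0.0067)]


def as_param_sets(names, values):
    """Pair each tuple with the argument names: one dict per test run."""
    keys = [name.strip() for name in names.split(',')]
    return [dict(zip(keys, row)) for row in values]


param_sets = as_param_sets(argnames, argvalues)  # walk once: 4 runs

# For comparison: stacking the two inputs as separate fixtures would cross them.
n_eff_values = [p['disk_n_eff'] for p in param_sets]
rt_values = [p['disk_rt_trans'] for p in param_sets]
stacked = list(product(n_eff_values, rt_values))

print(len(param_sets), len(stacked))  # 4 16
```

Walking once keeps each expected peak wavelength and width attached to the inputs it belongs to, which the stacked sweep cannot do.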

Note that this method only works with CircuitModelTest. Other test types are harder to parametrize this way because their test methods are defined internally, so you can’t prefix them with a @pytest.mark.parametrize decorator.

The file can be downloaded here: test_diskresonator_smatrix.py

Specify which tests to run

The pytest.ini configuration file also allows you to choose which test files and methods are used, a process called test discovery. By default, the following patterns are used:

Files must match *_test.py or test_*.py,
Classes must match Test*,
Functions must match test*.

All test methods matching these three conditions are then included. We can override this behaviour and choose our own set of files as in this example:

```ini
[pytest]
addopts = '--html=report.html' '--self-contained-html'
python_files = diskres_test.py another_test.py
python_classes = Test* ; default
python_functions = test* ; default
```

Now you can simply run pytest or pytest --regenerate to run or regenerate only these two files.

For more information about test discovery, check out the pytest documentation.
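The three discovery conditions can be modelled as glob matching. The sketch below is a simplification for illustration: is_collected is a hypothetical helper, and pytest itself applies further rules (for example, entries without wildcards in python_classes and python_functions act as prefixes, and classes that define __init__ are not collected).

```python
from fnmatch import fnmatchcase

DEFAULT_FILES = ("test_*.py", "*_test.py")
DEFAULT_CLASSES = ("Test*",)
DEFAULT_FUNCTIONS = ("test*",)


def matches_any(name, patterns):
    """True if name matches at least one glob pattern (case-sensitive)."""
    return any(fnmatchcase(name, pattern) for pattern in patterns)


def is_collected(file_name, class_name, function_name,
                 python_files=DEFAULT_FILES,
                 python_classes=DEFAULT_CLASSES,
                 python_functions=DEFAULT_FUNCTIONS):
    """Simplified model: a test method runs only if all three conditions hold."""
    return (matches_any(file_name, python_files)
            and matches_any(class_name, python_classes)
            and matches_any(function_name, python_functions))


# The custom python_files setting from the pytest.ini example above.
custom_files = ("diskres_test.py", "another_test.py")

print(is_collected("test_disk.py", "TestDiskResonator", "test_disk_smatrix"))  # True
print(is_collected("test_disk.py", "TestDiskResonator", "test_disk_smatrix",
                   python_files=custom_files))                                # False
```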

Use a different path for the reference or temporary files

Customizing the file locations and names for the ip_manager.testing.ComponentReferenceTest is done by adding these fixtures:

reference_name: basename (without extension) of the reference files. Defaults to the name of the component. ComponentReferenceTest will add a suffix (e.g. _layout) and an extension (.gds, .xml, etc.).
reference_folder_name: name of the folder for the known-good reference files, relative to the location of the test file. By default this is ref.
temp_folder_name: name of the folder for temporary files with the current layout, netlist, smatrix, and so forth. By default this is the same folder as reference_folder_name.

The files are always stored in a subfolder of the folder where the test file is located. IP Manager will create the necessary folders if they don’t exist.

Example of changing the reference file name and location:

```python
# Optional fixture specifying the relative folder path into which reference files are stored
@pytest.fixture
def reference_folder_name(self):
    return 'my_refs'  # default is 'ref'


# Optional fixture specifying the first part of the reference file names
@pytest.fixture
def reference_name(self):
    return 'cell_abc'  # default is component name
```

For parametrized reference tests, as demonstrated in Test parametrization, the file name should take different values for different parameters so that no files overwrite each other or get wrongly compared.

Example of changing the relative location where temporary files (with the current layout, netlist, smatrix, …) are saved:

```python
# Optional fixture specifying the relative folder path into which temporary files are stored
@pytest.fixture
def temp_folder_name(self):
    return 'temp_files'  # default is the same as reference_folder_name
```
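The folder rules above amount to simple path arithmetic relative to the test file. The sketch below uses pathlib to show where files would end up; the helpers reference_file and temp_folder are hypothetical, and the _layout suffix and .gds extension are only the examples from the list above, not a complete description of what IP Manager appends.

```python
from pathlib import PurePosixPath


def reference_file(test_file, reference_name, suffix, extension,
                   reference_folder_name="ref"):
    """Hypothetical helper: reference files live in a subfolder next to the test file."""
    folder = PurePosixPath(test_file).parent / reference_folder_name
    return folder / (reference_name + suffix + extension)


def temp_folder(test_file, reference_folder_name="ref", temp_folder_name=None):
    """Hypothetical helper: the temp folder defaults to the reference folder."""
    name = temp_folder_name if temp_folder_name is not None else reference_folder_name
    return PurePosixPath(test_file).parent / name


test_file = "/project/tests/test_disk.py"
print(reference_file(test_file, "cell_abc", "_layout", ".gds", "my_refs"))
# /project/tests/my_refs/cell_abc_layout.gds
print(temp_folder(test_file))                                 # /project/tests/ref
print(temp_folder(test_file, temp_folder_name="temp_files"))  # /project/tests/temp_files
```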

Specify timestamp in GDS files

The default timestamp is set to 1 January 1970, so that the GDS reference files stay identical when they are regenerated. This value can be changed by specifying the ref_time fixture:

```python
import datetime

import pytest
from ip_manager.testing import ComponentReferenceTest, Compare


@pytest.mark.comparisons([Compare.GdsToGds, Compare.LayoutToXML, Compare.NetlistToXML, Compare.SMatrix])
class TestDiskResonator(ComponentReferenceTest):

    @pytest.fixture
    def component(self):
        # Create and return a basic disk resonator
        from team_library.all import DiskResonator
        my_disk = DiskResonator(name="my_disk")
        return my_disk

    # Optional fixture specifying the timestamp in the reference GDS files
    @pytest.fixture
    def ref_time(self):
        return datetime.datetime(2000, 1, 1)
```
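Why a fixed timestamp matters can be seen at the byte level. In the GDSII stream format, the library header record (BGNLIB) stores a modification and an access date as twelve 16-bit integers. The sketch below packs such a record with struct; it illustrates the file format in general, not IP Manager's or IPKISS's writer, and some GDSII writers encode the year differently.

```python
import datetime
import struct

BGNLIB = 0x0102  # record type 0x01 (BGNLIB), data type 0x02 (2-byte signed integers)


def bgnlib_record(mod_time, acc_time=None):
    """Pack a GDSII BGNLIB record: a 4-byte header followed by the year, month,
    day, hour, minute and second of the modification and access times."""
    if acc_time is None:
        acc_time = mod_time
    fields = []
    for t in (mod_time, acc_time):
        fields += [t.year, t.month, t.day, t.hour, t.minute, t.second]
    length = 4 + 2 * len(fields)  # header plus 12 two-byte integers = 28 bytes
    return struct.pack(">HH12h", length, BGNLIB, *fields)


epoch = datetime.datetime(1970, 1, 1)
first_run = bgnlib_record(epoch)
second_run = bgnlib_record(epoch)
now_based = bgnlib_record(datetime.datetime(2000, 1, 1))

print(first_run == second_run)  # True: same timestamp, identical bytes
print(first_run == now_based)   # False: a different timestamp changes the file
```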

Exact polygon comparison

Layout drawings can be equivalent to a designer even if vertices are in a different order or complex shapes are cut in a different way. For that reason, IP Manager performs an XOR test by default: on each layer, it computes the logical XOR between the shapes in the layout reference file and those in the actual layout. The result is 1 wherever the shapes do not overlap perfectly and 0 where they do, so the layouts are considered equivalent when the XOR is empty.

In some cases you may want to compare polygons more strictly, for instance:

To test whether cut faces are still exactly the same after boolean operations.

To test whether the order of vertices in a polygon is still the same (starting vertex and direction).
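The difference between the two comparison modes can be sketched on a toy grid. Below, polygons are rasterized into unit cells with a ray-casting point-in-polygon test, and the XOR is the symmetric difference of the two cell sets. This grid approximation is an assumption made to keep the example dependency-free; it is not how IP Manager computes the XOR. A square listed from a different starting vertex, or in the opposite direction, is XOR-equivalent but not exactly equal.

```python
def point_in_polygon(x, y, vertices):
    """Ray casting: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge crosses the horizontal line through y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(vertices, size=10):
    """Set of unit grid cells whose centres lie inside the polygon."""
    return {(i, j) for i in range(size) for j in range(size)
            if point_in_polygon(i + 0.5, j + 0.5, vertices)}


def xor_equivalent(a, b, size=10):
    """XOR-style check: equivalent when the symmetric difference is empty."""
    return not (rasterize(a, size) ^ rasterize(b, size))


def exactly_equal(a, b):
    """Exact check: same vertices in the same order."""
    return list(a) == list(b)


square = [(0, 0), (4, 0), (4, 4), (0, 4)]
rotated_start = [(4, 4), (0, 4), (0, 0), (4, 0)]  # same square, other first vertex
reversed_dir = list(reversed(square))             # same square, clockwise
shifted = [(1, 0), (5, 0), (5, 4), (1, 4)]        # genuinely different shape

print(xor_equivalent(square, rotated_start), exactly_equal(square, rotated_start))  # True False
print(xor_equivalent(square, shifted))                                              # False
```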

To disable the XOR-based testing, ip_manager.testing.ComponentReferenceTest supports an xor_equivalence fixture:

```python
import numpy as np
import pytest
from ip_manager.testing import ComponentReferenceTest, Compare


@pytest.mark.comparisons([Compare.GdsToGds, Compare.LayoutToXML, Compare.NetlistToXML, Compare.SMatrix])
class TestDiskResonatorReference(ComponentReferenceTest):

    @pytest.fixture
    def component(self):
        # Create and return a basic disk resonator
        from team_library.all import DiskResonator
        my_disk = DiskResonator(name="my_disk")
        return my_disk

    @pytest.fixture
    def xor_equivalence(self):
        # By setting xor_equivalence to False, we do an exact comparison that detects
        # changes in the order of vertices and in cut faces (this is often not needed)
        return False

    @pytest.fixture
    def wavelengths(self):
        return np.arange(1.3, 1.8, 0.0001)
```

Layout comparison with a tolerance

There are situations where layout drawings differ slightly from each other: certain shapes may snap to a different grid point due to layout modifications, or an update in the dependencies of IPKISS may cause such small differences (e.g. a numpy update that changes the optimizations used for certain numeric functions, which propagates to a slightly different end result).

If this is expected and acceptable to the user, IP Manager provides an option to compare two GDS references within a certain tolerance.

ip_manager.testing.ComponentReferenceTest supports a xor_tolerance fixture that configures the tolerated discrepancy between the two underlying shapes.

The test algorithm first computes the XOR difference between the combined shapes on each layer and then shrinks the resulting shapes. The shrunk shapes are defined as the Minkowski difference of the XOR result with a circle whose radius is half the absolute value of xor_tolerance (see shapely buffer <https://shapely.readthedocs.io/en/latest/reference/shapely.buffer.html>). If the shrunk shapes are empty, the compared layouts are considered equivalent; in effect, discrepancies narrower than xor_tolerance are ignored.
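The shrinking step can be approximated on a grid as a morphological erosion with a disk. In the sketch below, the XOR residue is a set of grid cells with a given pitch in micrometres; a cell survives only if every cell within the shrink radius is also part of the residue. The helper names and the grid representation are assumptions for illustration, not IP Manager's implementation (which, per the text, relies on shapely's buffer).

```python
def erode(cells, radius):
    """Grid approximation of the Minkowski difference with a disk of `radius` cells:
    keep a cell only if every cell within that radius is also present."""
    offsets = [(di, dj)
               for di in range(-radius, radius + 1)
               for dj in range(-radius, radius + 1)
               if di * di + dj * dj <= radius * radius]
    return {(i, j) for (i, j) in cells
            if all((i + di, j + dj) in cells for di, dj in offsets)}


def within_tolerance(xor_cells, xor_tolerance, pitch):
    """Equivalent if the XOR residue vanishes after shrinking by |xor_tolerance| / 2."""
    radius = round(abs(xor_tolerance) / 2 / pitch)  # shrink distance in grid cells
    return not erode(xor_cells, radius)


pitch = 1e-4          # grid pitch in micrometres (0.1 nm), an arbitrary choice
xor_tolerance = 1e-3  # tolerance in micrometres, as in the fixture example

sliver = {(i, j) for i in range(50) for j in range(2)}  # 0.2 nm wide residue
blob = {(i, j) for i in range(20) for j in range(20)}   # 2 nm x 2 nm residue

print(within_tolerance(sliver, xor_tolerance, pitch))  # True: sliver is tolerated
print(within_tolerance(blob, xor_tolerance, pitch))    # False: real difference
```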

To enable XOR-based testing with a tolerance, specify xor_tolerance as a fixture in a ComponentReferenceTest that runs the GdsToGds comparison:

```python
@pytest.mark.comparisons([Compare.GdsToGds, Compare.LayoutToXML, Compare.NetlistToXML, Compare.SMatrix])
class TestDiskResonatorReference(ComponentReferenceTest):

    @pytest.fixture
    def component(self):
        # Create and return a basic disk resonator
        from team_library.all import DiskResonatorV2
        my_disk = DiskResonatorV2(name="my_disk_v2")
        return my_disk

    @pytest.fixture
    def xor_tolerance(self):
        # tolerance specified in microns
        return 1e-3
```

S-model testing

The scatter matrix of a component can be verified in two ways: either by comparing it to a reference file, or by manually writing your own checks. The scatter matrix reference test is a bit special as it is not as strict as the other three reference tests. Small deviations in the scatter matrix as a function of the wavelength are allowed, as long as the relative energy of this error is below a certain value. This similarity tolerance can be modified with the following fixture:

```python
@pytest.mark.comparisons([Compare.GdsToGds, Compare.LayoutToXML, Compare.NetlistToXML, Compare.SMatrix])
class TestDiskResonator(ComponentReferenceTest):

    @pytest.fixture
    def component(self):
        # Create and return a basic disk resonator
        from team_library.all import DiskResonator
        my_disk = DiskResonator(name="my_disk")
        return my_disk

    # Optional fixture specifying the maximum relative error for scatter matrix comparison tests
    @pytest.fixture
    def similarity_tolerance(self):
        return 0.01  # default is 0.02
```

The value 0.0 means strict equality is necessary, whereas e.g. 0.1 would allow for sizable deviations. The similarity (one minus the error) is always printed in the test report if the S-matrices aren’t strictly equal.
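One plausible reading of "relative energy of the error" is the squared norm of the difference divided by the squared norm of the reference, evaluated over the wavelength sweep. The exact formula IP Manager uses is not given here, so the sketch below is an assumption; it only illustrates how a similarity of one minus that error compares against similarity_tolerance.

```python
import cmath


def relative_error_energy(actual, reference):
    """Assumed metric: energy of (actual - reference) relative to the energy of
    the reference, for equal-length sequences of complex S-parameter samples."""
    if len(actual) != len(reference):
        raise ValueError("both S-parameter sweeps must have the same length")
    err = sum(abs(a - r) ** 2 for a, r in zip(actual, reference))
    ref = sum(abs(r) ** 2 for r in reference)
    if ref == 0:
        return 0.0 if err == 0 else float("inf")
    return err / ref


def similarity(actual, reference):
    return 1.0 - relative_error_energy(actual, reference)


def smatrix_matches(actual, reference, similarity_tolerance=0.02):
    return relative_error_energy(actual, reference) <= similarity_tolerance


# Toy sweep of one S-matrix entry over ten wavelength points.
reference = [cmath.rect(0.5, 0.1 * k) for k in range(10)]
slightly_off = [1.01 * s for s in reference]  # 1 % amplitude error everywhere
far_off = [1.5 * s for s in reference]        # 50 % amplitude error everywhere

print(similarity(reference, reference))                         # 1.0
print(smatrix_matches(slightly_off, reference, 0.01))           # True (error about 1e-4)
print(smatrix_matches(far_off, reference))                      # False (error about 0.25)
```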

To write custom scatter matrix tests that don’t rely on reference files, see Helper classes.

Here is such an example using the SMatrixTester class. More specifically, we create fixtures for a directional coupler component and test its scatter matrix for the expected phase shifts between specific ports. An absolute tolerance (atol) allows for small deviations in the phase values. Note that phases must be given in radians.

```python
import si_fab.all as pdk
from ip_manager.testing import SMatrixTester
import numpy as np
import pytest


# Create fixtures for component and wavelengths
@pytest.fixture
def dc():
    return pdk.SiDirectionalCouplerS(straight_length=8.0)


@pytest.fixture
def wavelengths():
    return np.linspace(1.5, 1.6, 101)


@pytest.fixture
def smatrix(dc, wavelengths):
    dc_cm = dc.CircuitModel()
    return dc_cm.get_smatrix(wavelengths=wavelengths)


@pytest.fixture
def tester(smatrix):
    return SMatrixTester(smatrix, print_peaks=False)


def test_phase_shift(tester):
    """
    Test that the directional coupler has the correct phase relationship between the cross and bar ports,
    by comparing the expected phase values with the simulated values using the "phase" metric and checking
    that the results are within the absolute tolerance atol. Note that ports can also be specified by their
    integer indices instead of their names.
    """
    expected_phase_values = {
        ("out2", "in1"): np.pi / 2,  # Phase shift from port "in1" to port "out2"
        ("out1", "in2"): np.pi / 2,  # Phase shift from port "in2" to port "out1"
        ("in1", "in2"): 0,  # Phase shift from port "in2" to port "in1"
    }

    # Test phase shift
    phase_test_result = tester.test_port_comparison(expected_phase_values, metric="phase", atol=0.01)
    assert phase_test_result
```

The test checks whether the phase shift values match the expected values within the specified tolerance, ensuring the correctness of the scatter matrix calculations for the directional coupler component.
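When writing similar phase checks by hand, keep in mind that phases wrap around at ±π: two values close to π and -π describe almost the same phase although their plain difference is nearly 2π. The stdlib sketch below shows a wrap-aware comparison of a complex S-matrix entry against an expected phase. The helper phase_matches is hypothetical and is not a description of how SMatrixTester.test_port_comparison works internally.

```python
import cmath
import math


def wrapped_phase_difference(actual, expected):
    """Smallest signed difference between two angles in radians, in [-pi, pi)."""
    return (actual - expected + math.pi) % (2 * math.pi) - math.pi


def phase_matches(transmission, expected_phase, atol):
    """Hypothetical helper: True if the phase of a complex S-matrix entry lies
    within atol radians of expected_phase, accounting for 2*pi wrapping."""
    actual_phase = cmath.phase(transmission)
    return abs(wrapped_phase_difference(actual_phase, expected_phase)) <= atol


cross = 0.7j  # e.g. a cross-port transmission with a pi/2 phase shift
print(phase_matches(cross, math.pi / 2, atol=0.01))  # True
print(phase_matches(1.0, math.pi / 2, atol=0.01))    # False

near_pi = cmath.rect(1.0, math.pi - 0.001)
print(phase_matches(near_pi, -math.pi + 0.001, atol=0.01))  # True despite the wrap
```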